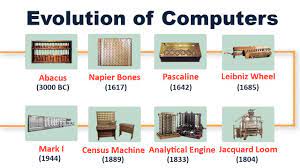

The Evolution of Computers: From Abacus to Present

Computers have come a long way since the invention of the abacus, often considered the first computing device. The evolution of computers over centuries has been marked by significant advancements in technology, leading to the powerful and versatile machines we use today.

Abacus

The abacus, developed thousands of years ago, was a simple counting tool used for basic arithmetic calculations. It consisted of beads or stones on rods, with each rod typically standing for a place value such as ones, tens, or hundreds. While primitive compared to modern computers, the abacus showed that numbers could be represented and manipulated with a physical device, an idea that underlies all later computing machines.

Mechanical Calculators

In the 17th century, mechanical calculators such as Blaise Pascal’s Pascaline and Gottfried Wilhelm Leibniz’s stepped reckoner were invented. These devices could perform arithmetic operations more efficiently than manual methods, paving the way for automated computation.

Early Computers

The mid-20th century saw the development of electronic computers like ENIAC (Electronic Numerical Integrator and Computer) and UNIVAC (Universal Automatic Computer). These early computers were room-sized machines built from thousands of vacuum tubes. ENIAC read its input from punched cards, while UNIVAC I relied mainly on magnetic tape.

Transistors and Integrated Circuits

The invention of the transistor in the late 1940s revolutionized computing by replacing bulky vacuum tubes with smaller, more efficient, and more reliable components. The development of integrated circuits in the late 1950s placed many transistors on a single chip, further miniaturizing electronics and leading to smaller and more powerful computers.

Personal Computers

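In the 1970s and 1980s, personal computers like the Apple II and IBM PC brought computing power to individuals and businesses. These early PCs offered keyboard-driven, mostly text-based interfaces, floppy-disk storage, and software applications such as spreadsheets and word processors. Graphical interfaces reached a mass audience later in the 1980s with machines like the Apple Macintosh, making computing accessible to an even wider audience.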

Modern Computers

Today’s computers are incredibly fast, compact, and capable of performing complex tasks with ease. Advances in hardware technology, such as solid-state drives and multicore processors, have made modern computers indispensable tools for work, communication, entertainment, and research.

From Abacus to AI: Tracing the Revolutionary Evolution of Computers

- The abacus, an ancient calculating tool, is considered one of the earliest forms of computers.

- Mechanical calculators like Pascal’s calculator and Leibniz’s stepped reckoner were important precursors to modern computers.

- Charles Babbage’s design for the Analytical Engine in the 19th century laid the foundation for modern computing concepts.

- ENIAC, often described as the first general-purpose electronic digital computer, was developed in the 1940s and marked a significant advancement in computing technology.

- The invention of the transistor in the late 1940s, and its adoption in computers during the 1950s, led to smaller and more powerful machines than their vacuum tube-based predecessors.

- Integrated circuits (microchips) revolutionized computing by enabling greater processing power and miniaturization of components.

- The advent of personal computers in the 1970s and 1980s made computing more accessible to individuals and businesses.

- Advancements in artificial intelligence, quantum computing, and other cutting-edge technologies continue to shape the evolution of computers today.

The abacus, an ancient calculating tool, is considered one of the earliest forms of computers.

The abacus holds a significant place in the evolution of computers as one of the earliest computational devices. Used for basic arithmetic, it preceded the more sophisticated calculating machines that emerged over the following centuries. Its simple yet effective design shows how innovation in early tools has shaped the technological landscape we navigate today.

Mechanical calculators like Pascal’s calculator and Leibniz’s stepped reckoner were important precursors to modern computers.

Mechanical calculators such as Pascal’s calculator and Leibniz’s stepped reckoner were important precursors to modern computers. These early devices demonstrated that arithmetic could be carried out by a mechanism rather than by hand, and they laid the groundwork for more sophisticated computing machines. In doing so, they set the stage for the evolution of computers from simple counting tools to the powerful and versatile machines we rely on today.

Charles Babbage’s design for the Analytical Engine in the 19th century laid the foundation for modern computing concepts.

Charles Babbage’s design for the Analytical Engine, first described in 1837, marked a pivotal moment in the evolution of computers. The design introduced key elements of modern computing: programs supplied on punched cards, a memory (the “store”) kept separate from the arithmetic unit (the “mill”), and conditional branching. Although the Analytical Engine was never completed, its theoretical principles anticipated the architecture that later generations of computer scientists and engineers would build.

ENIAC, often described as the first general-purpose electronic digital computer, was developed in the 1940s and marked a significant advancement in computing technology.

The development of ENIAC in the 1940s represented a pivotal moment in the evolution of computing technology. Completed in 1945 and often described as the first general-purpose electronic digital computer, ENIAC performed its calculations with vacuum tubes rather than mechanical or electromechanical parts, and it could be reprogrammed for different problems, initially by rewiring cables and setting switches. This achievement set a new standard for computational speed and laid the foundation for further advances in computer technology.

The invention of the transistor in the late 1940s, and its adoption in computers during the 1950s, led to smaller and more powerful machines than their vacuum tube-based predecessors.

The transistor, invented in 1947, began to appear in computers during the 1950s and marked a significant milestone in their evolution. Transistors replaced bulky and less reliable vacuum tubes, offering a more efficient and compact way to switch and amplify electronic signals. This breakthrough paved the way for the miniaturization of computers, leading to advances in speed, reliability, and overall performance that have shaped the modern computing landscape.

Integrated circuits (microchips) revolutionized computing by enabling greater processing power and miniaturization of components.

Integrated circuits, also known as microchips, have played a pivotal role in the evolution of modern computers. By integrating many transistors and other electronic functions onto a single chip, they made computers smaller, faster, and more energy-efficient. This technology has been instrumental in shaping the digital landscape we live in today, powering everything from smartphones and laptops to supercomputers.

The advent of personal computers in the 1970s and 1980s made computing more accessible to individuals and businesses.

The advent of personal computers in the 1970s and 1980s marked a significant milestone in the evolution of computing, making this technology more accessible to individuals and businesses alike. With user-friendly interfaces, storage capabilities, and a range of software applications, personal computers revolutionized how people interacted with technology on a daily basis. This era laid the foundation for the widespread integration of computers into various aspects of our lives, shaping the way we work, communicate, and engage with information.

Advancements in artificial intelligence, quantum computing, and other cutting-edge technologies continue to shape the evolution of computers today.

Advancements in artificial intelligence, quantum computing, and other cutting-edge technologies play a pivotal role in shaping the ongoing evolution of computers. Artificial intelligence systems can process vast amounts of data and learn from experience, while quantum computing, still largely experimental, aims to tackle certain problems that are impractical for conventional machines. Together these technologies are opening a new chapter in the history of computing, one that promises to transform industries, drive scientific discoveries, and redefine the way we interact with technology.